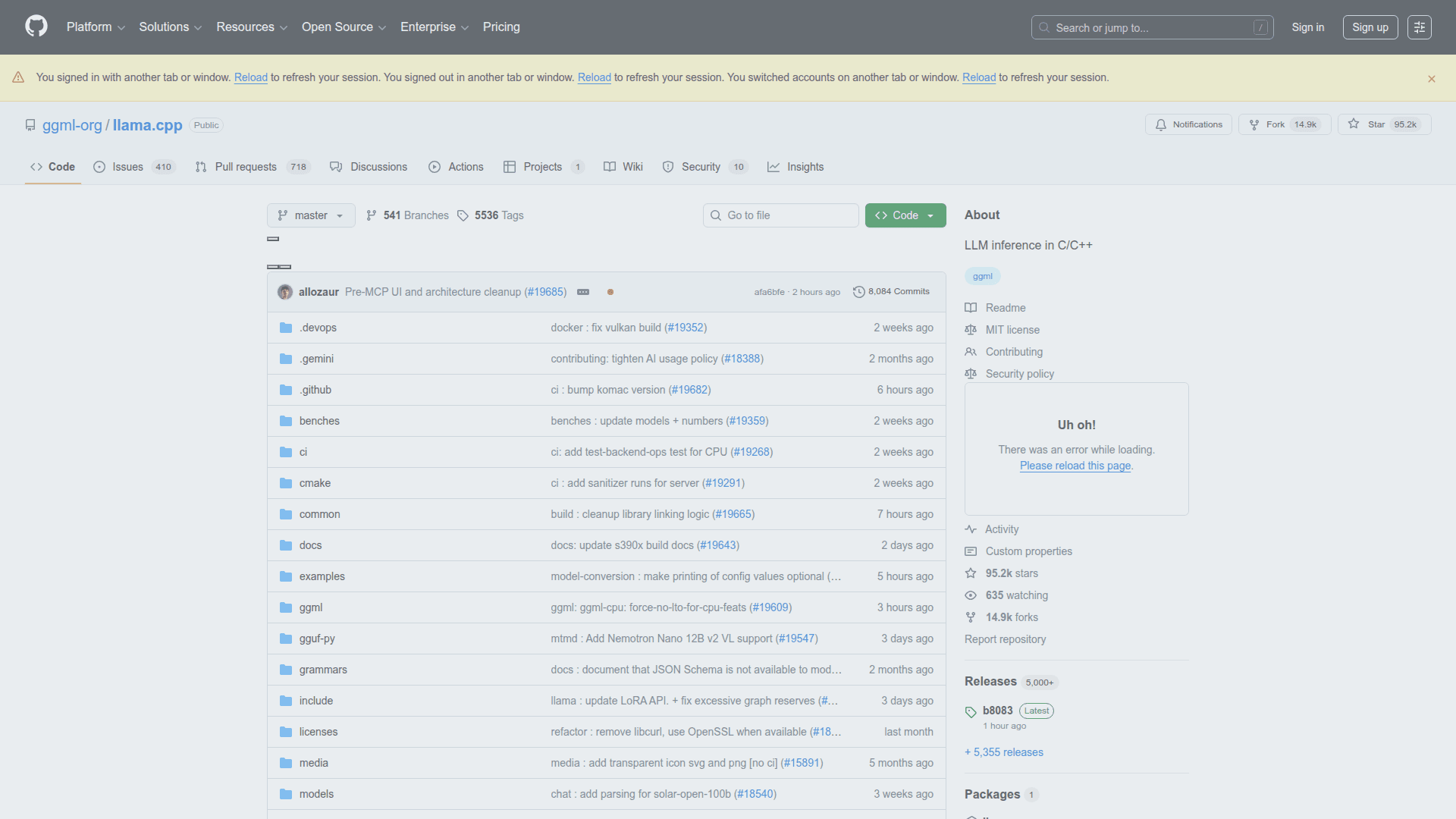

llama.cpp

LLM inference in C/C++ enabling efficient local execution of large language models across various hardware platforms.

At a Glance

Pricing

Free and open source under MIT license

About llama.cpp

llama.cpp is a high-performance C/C++ library for running large language model (LLM) inference locally on a wide variety of hardware. Originally developed to enable running Meta's LLaMA models on consumer hardware, it has evolved into a comprehensive framework supporting numerous model architectures and quantization formats. The project prioritizes efficiency, portability, and minimal dependencies, making it ideal for developers who want to deploy LLMs without relying on cloud services.

- Pure C/C++ Implementation keeps the codebase lightweight: it has no required external dependencies and compiles easily across platforms without heavy frameworks such as PyTorch or TensorFlow.

- Extensive Quantization Support makes it possible to run large models on limited hardware using integer quantization formats from 1.5-bit up to 8-bit (4-bit, 5-bit, and 8-bit among them), which cut memory requirements dramatically while keeping output quality reasonable.

- Multi-Platform Hardware Acceleration supports CUDA, Metal, OpenCL, Vulkan, and SIMD-optimized CPU code, so models can run efficiently on NVIDIA GPUs, Apple Silicon, AMD GPUs, and modern CPUs.

- Model Format Compatibility centers on the GGUF format, and the bundled conversion tools let you bring in models from Hugging Face and other sources.

- Server Mode includes a built-in HTTP server with OpenAI-compatible API endpoints, making it easy to integrate into existing applications and workflows.

- Active Community Development benefits from rapid iteration and contributions from a large open-source community, with frequent updates that add support for new models and optimizations.
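To make the quantization idea concrete, here is a minimal Python sketch of block-wise 4-bit quantization, loosely modeled on llama.cpp's Q4_0 scheme: weights are split into blocks of 32, each block stores one scale factor, and every weight becomes a 4-bit code. It is a simplification for illustration, not the library's implementation; the real format stores the scale as fp16 and packs two codes per byte, which this sketch does not do. All function names here are hypothetical.

```python
"""Toy block-wise 4-bit quantization, loosely modeled on llama.cpp's Q4_0 idea.

Simplifications (assumptions for illustration): scales stay as Python floats
instead of fp16, and 4-bit codes are kept as ints instead of packed nibbles.
"""
import math

BLOCK_SIZE = 32  # number of weights that share one scale factor


def quantize_block(values):
    """Map a block of floats to one scale plus integer codes in [0, 15]."""
    # Use the value with the largest magnitude, keeping its sign, so that it
    # maps exactly onto code 0; the signed range -8..7 has one extra step there.
    amax = max(values, key=abs)
    scale = amax / -8 if amax != 0 else 1.0
    # Shift the signed result into 0..15 and clamp to 4 bits.
    codes = [min(15, max(0, round(v / scale) + 8)) for v in values]
    return scale, codes


def dequantize_block(scale, codes):
    """Recover approximate floats from a scale and 4-bit codes."""
    return [(c - 8) * scale for c in codes]


def quantize(weights):
    """Split weights into BLOCK_SIZE chunks and quantize each chunk."""
    return [quantize_block(weights[i:i + BLOCK_SIZE])
            for i in range(0, len(weights), BLOCK_SIZE)]


def dequantize(blocks):
    """Concatenate the dequantized blocks back into one flat list."""
    return [x for scale, codes in blocks for x in dequantize_block(scale, codes)]


def bits_per_weight(code_bits=4, scale_bits=16, block_size=BLOCK_SIZE):
    """Average storage per weight once the per-block scale is included."""
    return code_bits + scale_bits / block_size


if __name__ == "__main__":
    weights = [math.sin(i * 0.1) for i in range(64)]
    restored = dequantize(quantize(weights))
    print("max abs error:", max(abs(a - b) for a, b in zip(weights, restored)))
    print("bits per weight:", bits_per_weight())  # 4 + 16 / 32 = 4.5
```

With a 16-bit scale per 32-weight block, storage works out to 4.5 bits per weight instead of 16 for fp16, which is where most of the memory savings come from: the weights of a hypothetical 7-billion-parameter model shrink from about 14 GB to roughly 3.9 GB.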

To get started, clone the repository, build it with CMake for your preferred backend (CPU, CUDA, Metal, and so on), download a model in GGUF format, and run inference with the bundled command-line tools (such as llama-cli) or the HTTP server (llama-server). The project's documentation covers build options, model conversion, and API usage.
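For the server route, the sketch below shows how an application might call llama.cpp's OpenAI-compatible chat endpoint using only Python's standard library. It assumes a server started separately (for example with llama-server -m model.gguf) and listening on its default address, http://127.0.0.1:8080; adjust BASE_URL if you changed the host or port. The helper names are hypothetical; only the /v1/chat/completions path and the OpenAI-style request and response fields come from the API being mimicked.

```python
"""Minimal client for a locally running llama.cpp server (a sketch).

Assumes the server was started separately and listens on its default
address; nothing here starts or manages the server process.
"""
import json
import urllib.request

BASE_URL = "http://127.0.0.1:8080"  # assumption: default host and port


def build_chat_request(prompt, max_tokens=128, temperature=0.7):
    """Build an OpenAI-style chat completion request body."""
    return {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }


def extract_reply(response):
    """Pull the assistant text out of an OpenAI-style response dict."""
    return response["choices"][0]["message"]["content"]


def chat(prompt, base_url=BASE_URL):
    """POST the request to /v1/chat/completions and return the reply text."""
    body = json.dumps(build_chat_request(prompt)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Generation can be slow on CPU, so allow a generous timeout.
    with urllib.request.urlopen(req, timeout=120) as resp:
        return extract_reply(json.load(resp))
```

With the server running, chat("Explain GGUF in one sentence.") returns the model's reply as a string. Because the endpoint follows the OpenAI format, existing OpenAI client libraries can usually be pointed at the same base URL instead.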

Pricing

Open Source

Free and open source under MIT license

- Full source code access

- All features included

- Community support

- MIT License

Capabilities

Key Features

- Pure C/C++ implementation with no required external dependencies

- Integer quantization from 1.5-bit to 8-bit (including 4-bit, 5-bit, and 8-bit)

- CUDA GPU acceleration for NVIDIA GPUs

- Metal acceleration for Apple Silicon

- Vulkan and OpenCL support

- CPU SIMD optimizations (AVX, AVX2, AVX-512 on x86; NEON on ARM)

- GGUF model format support

- Built-in HTTP server with OpenAI-compatible API

- Model conversion tools

- Batch processing support

- KV cache quantization

- Speculative decoding

- Grammar-based sampling

- Multi-modal model support

- Cross-platform compatibility
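KV cache quantization matters because the key/value cache grows linearly with context length. A rough sizing formula is 2 (keys and values) × layers × context tokens × KV heads × head dimension × bytes per element; the sketch below evaluates it for f16 and two ggml block formats, q8_0 and q4_0, which store 32 values plus one fp16 scale per block. The model shape in the example is hypothetical, and real memory use includes some overhead this estimate ignores.

```python
"""Back-of-the-envelope KV cache sizing (a sketch, not llama.cpp's allocator).

Bytes per element follow ggml block layouts: f16 = 2 bytes; q8_0 and q4_0
store 32 values per block plus one fp16 scale (34 and 18 bytes per block).
"""

BYTES_PER_ELEMENT = {
    "f16": 2.0,
    "q8_0": 34 / 32,
    "q4_0": 18 / 32,
}


def kv_cache_bytes(n_layers, n_ctx, n_kv_heads, head_dim, cache_type="f16"):
    """Approximate size of the K and V caches for a full context window."""
    elements = 2 * n_layers * n_ctx * n_kv_heads * head_dim  # 2 = K plus V
    return elements * BYTES_PER_ELEMENT[cache_type]


if __name__ == "__main__":
    # Hypothetical model shape: 32 layers, 8 KV heads of dim 128, 8192-token context.
    for cache_type in BYTES_PER_ELEMENT:
        gib = kv_cache_bytes(32, 8192, 8, 128, cache_type) / 2**30
        print(f"{cache_type}: {gib:.3f} GiB")
```

For that hypothetical shape, the cache needs 1 GiB at f16, about 0.53 GiB at q8_0, and about 0.28 GiB at q4_0. In llama.cpp the cache type is selected with options such as --cache-type-k and --cache-type-v; check the --help output of your build for the exact values it accepts.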