Stanford CS224N: Natural Language Processing with Deep Learning

Stanford's graduate-level course covering natural language processing with deep learning methods, including word vectors, neural networks, and transformers.

At a Glance

Pricing

Free public access to all course materials

About Stanford CS224N: Natural Language Processing with Deep Learning

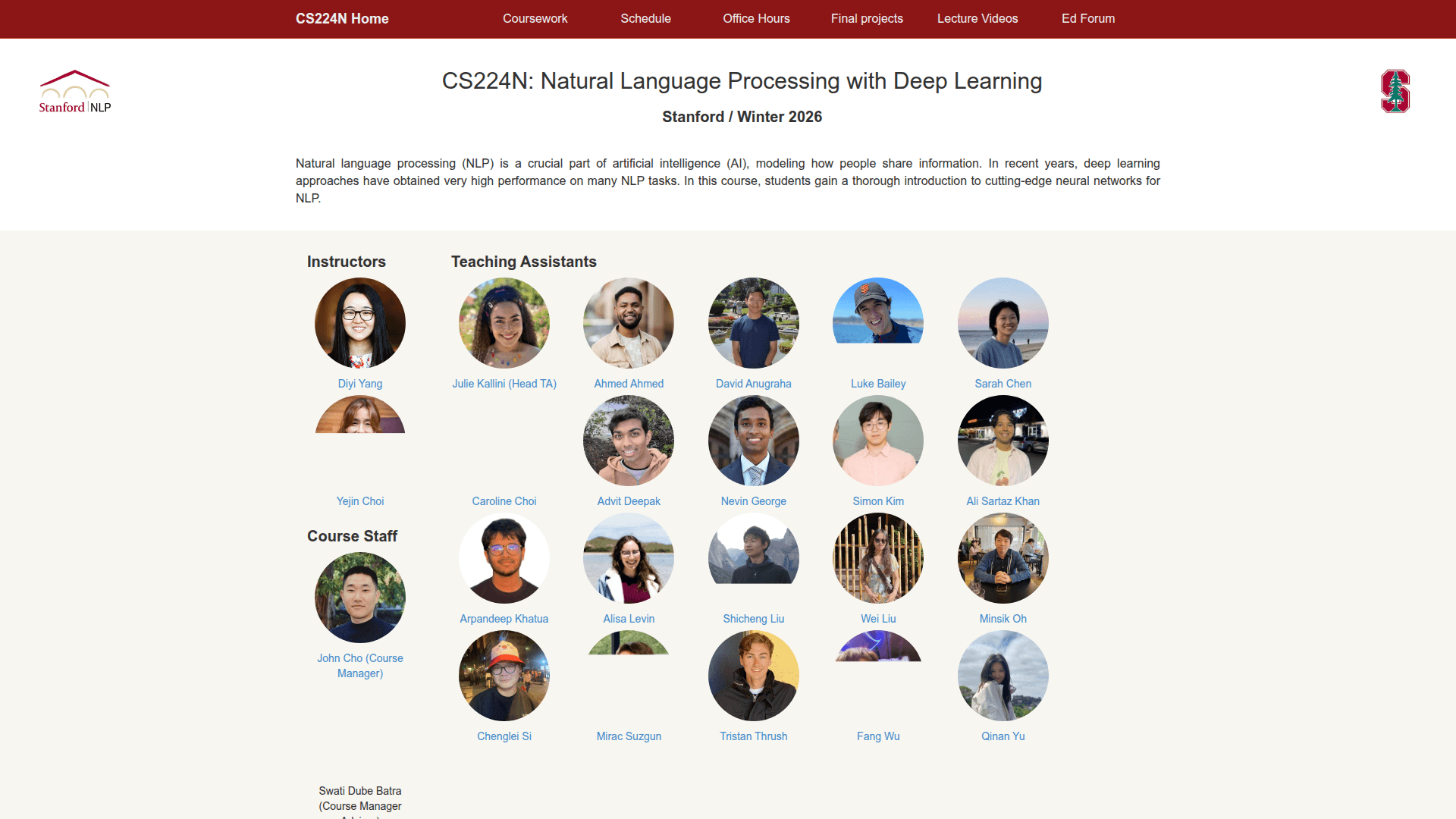

Stanford CS224N is a graduate-level course that teaches both the foundations and current techniques of natural language processing (NLP) with deep learning. Topics range from basic word vector representations to transformer architectures and large language models. Students learn the theory and practice implementation through lectures, assignments, and a final project.

- Word Vectors and Embeddings - Learn how to represent words as dense vectors using techniques like Word2Vec and GloVe, understanding the mathematical foundations behind distributed representations.

- Neural Network Fundamentals - Master backpropagation, dependency parsing, and recurrent neural networks (RNNs) including LSTMs and GRUs for sequence modeling tasks.

- Attention and Transformers - Deep dive into attention mechanisms and the transformer architecture that powers modern NLP systems like BERT and GPT.

- Pretraining and Fine-tuning - Understand how large language models are pretrained on massive corpora and fine-tuned for specific downstream tasks.

- Question Answering and NLG - Explore practical applications including question answering systems, machine translation, and natural language generation.

- Prompting and RLHF - Learn modern techniques for working with large language models including prompt engineering and reinforcement learning from human feedback.

- Hands-on Assignments - Complete programming assignments in PyTorch covering word vectors, neural dependency parsing, neural machine translation, and self-attention mechanisms.

- Final Project - Apply learned concepts to a substantial NLP project, with options for default projects or custom research proposals.
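
To give a flavor of the word-vector topic listed above, here is a minimal sketch of comparing dense word vectors by cosine similarity. It is not taken from the course materials: the three-dimensional vectors are made-up toy values, and `cosine_similarity`, `most_similar`, and `toy_vectors` are hypothetical names chosen for illustration. Real Word2Vec or GloVe vectors are learned from text and typically have hundreds of dimensions.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def most_similar(word, vectors):
    """Return the other word whose vector is closest to `word` by cosine similarity."""
    target = vectors[word]
    candidates = [w for w in vectors if w != word]
    return max(candidates, key=lambda w: cosine_similarity(target, vectors[w]))


# Made-up 3-dimensional vectors, for illustration only (assumption, not real embeddings).
toy_vectors = {
    "king": [0.8, 0.6, 0.1],
    "queen": [0.7, 0.7, 0.1],
    "banana": [0.1, 0.0, 0.9],
}

nearest = most_similar("king", toy_vectors)
```

With these toy values, `most_similar("king", toy_vectors)` returns `"queen"`, because the two vectors point in nearly the same direction while `"banana"` does not.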

To get started, students should be proficient in Python programming, familiar with calculus and linear algebra, and have basic knowledge of probability and statistics. The course materials, including lecture slides, videos, and assignments, are publicly available on the course website. The course is taught by NLP researchers including Christopher Manning and provides a foundation for anyone looking to work in natural language processing or AI research.


Pricing

Free Plan Available

Free public access to all course materials

- Lecture videos

- Lecture slides

- Assignment materials

- Suggested readings

- Course schedule

Capabilities

Key Features

- Word vector representations (Word2Vec, GloVe)

- Neural network fundamentals for NLP

- Recurrent neural networks (RNNs, LSTMs, GRUs)

- Attention mechanisms and transformers

- Pretraining and fine-tuning large language models

- Question answering systems

- Machine translation

- Natural language generation

- Prompting and RLHF techniques

- Hands-on PyTorch assignments

- Final project with custom research options
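
As a rough illustration of the attention mechanisms listed among the features above, the following is a minimal sketch of single-head scaled dot-product attention in plain Python. It is not the course's assignment code (which the listing says uses PyTorch): it assumes no masking, no learned projection matrices, and toy input values, and the function names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for single-head attention without masking."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy self-attention: the same made-up token vectors act as queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to 1 and says how strongly one token attends to every token in the sequence. A transformer layer would additionally apply learned linear projections to produce the queries, keys, and values, and would run several such heads in parallel.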