Vals AI

AI evaluation platform for testing LLM applications with industry-specific benchmarks, automated test suites, and performance analytics for enterprise teams.

At a Glance

Pricing

Get started with Vals AI at no cost: a free version is available.

About Vals AI

Vals AI is a comprehensive evaluation platform designed specifically for testing and benchmarking large language model (LLM) applications including copilots, RAG systems, and AI agents. The platform addresses critical gaps in AI evaluation by providing industry-specific benchmarks that reflect real-world use cases rather than academic datasets.

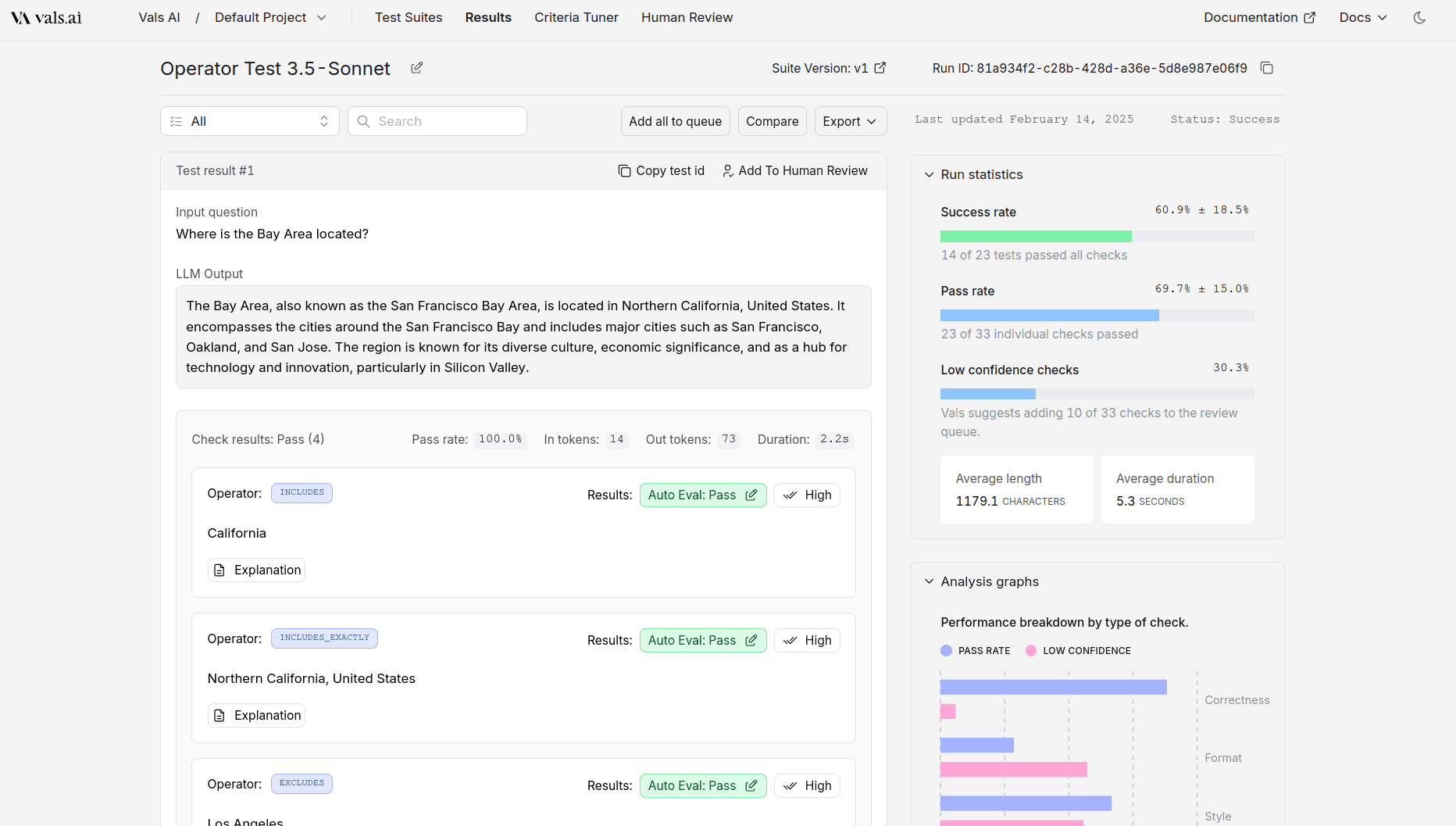

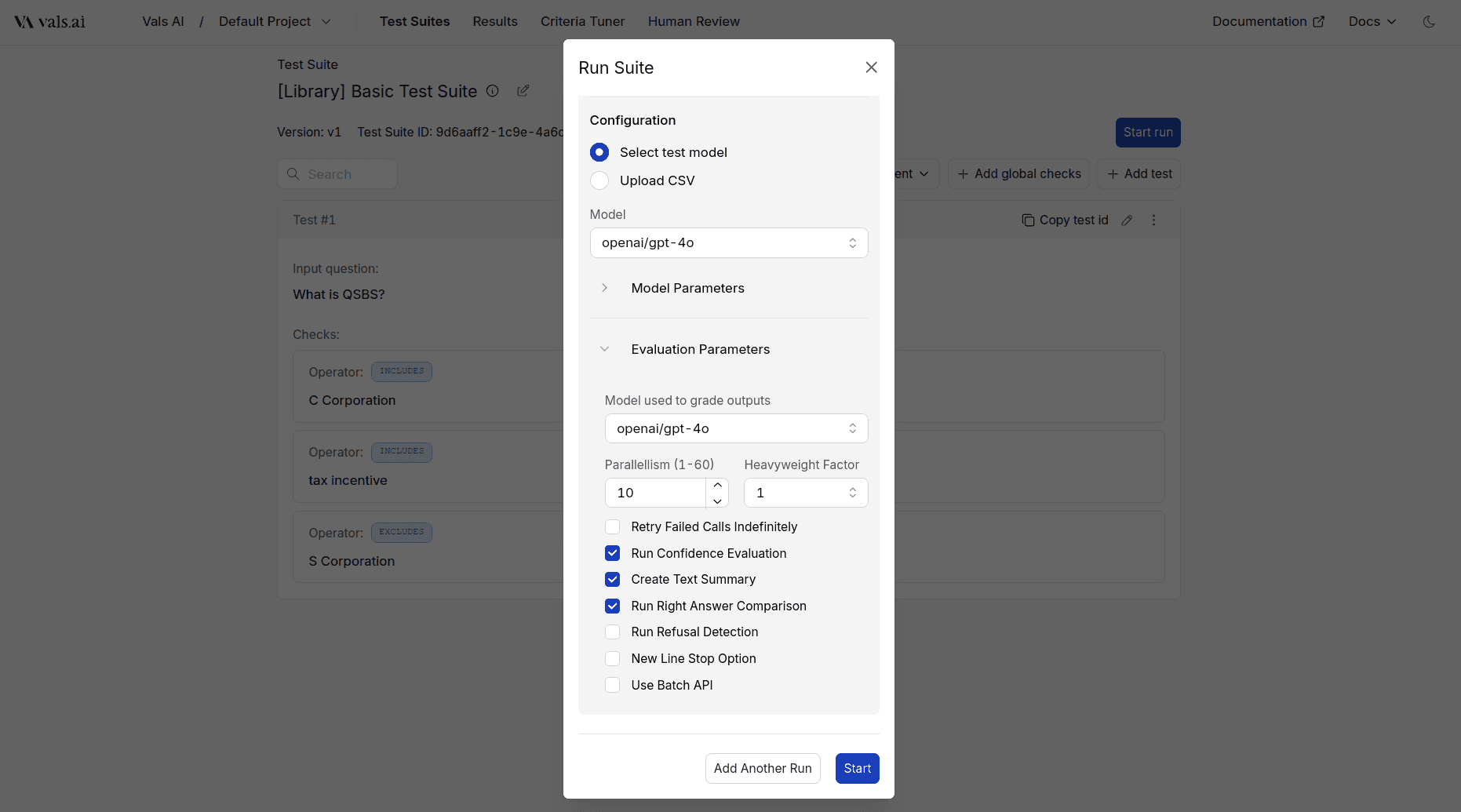

At its core, Vals AI uses Test Suites composed of multiple Tests, each with specific inputs and Checks that evaluate whether model responses meet defined expectations. This structured approach enables systematic evaluation of AI applications across domains like Legal, Finance, Healthcare, Mathematics, and Coding.
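The Test Suite, Test, and Check hierarchy described above can be pictured with a small sketch. This is not the Vals AI SDK: the class names (`EvalSuite`, `EvalTest`, `Check`), the `includes` helper, and the toy model are all hypothetical and serve only to illustrate how checks on model output roll up into a suite-level pass rate.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical types for illustration only; these are NOT the Vals AI SDK.

@dataclass
class Check:
    """A named expectation evaluated against a model response."""
    name: str
    passes: Callable[[str], bool]

@dataclass
class EvalTest:
    """One input plus the checks its response must satisfy."""
    input_text: str
    checks: List[Check] = field(default_factory=list)

@dataclass
class EvalSuite:
    """A titled collection of tests."""
    title: str
    tests: List[EvalTest] = field(default_factory=list)

    def run(self, model: Callable[[str], str]) -> float:
        """Return the fraction of checks that pass across all tests."""
        total = 0
        passed = 0
        for test in self.tests:
            output = model(test.input_text)
            for check in test.checks:
                total += 1
                if check.passes(output):
                    passed += 1
        return passed / total if total else 0.0

def includes(phrase: str) -> Check:
    """Check that the response contains a phrase (case-insensitive)."""
    return Check(f"includes:{phrase}", lambda out: phrase.lower() in out.lower())

def toy_model(prompt: str) -> str:
    """Stand-in for a real LLM application; always returns the same answer."""
    return "A contract needs an offer, acceptance, and consideration."

suite = EvalSuite("contract-basics", [
    EvalTest("What makes a contract valid?", [includes("offer"), includes("consideration")]),
    EvalTest("Name one element of a contract.", [includes("signature")]),
])
pass_rate = suite.run(toy_model)
print(f"{suite.title}: {pass_rate:.2f}")
```

In this sketch two of the three checks pass, so the suite reports a pass rate of about 0.67. A real check could be far richer than a substring match, for example a rubric graded by another model or by a domain expert.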

The platform offers both private benchmarking, which prevents data leakage, and public benchmark resources. Its public benchmarks (available at vals.ai/benchmarks) are free resources for comparing models across categories such as Legal (CaseLaw, ContractLaw, LegalBench), Finance (CorpFin, Finance Agent, TaxEval), Healthcare (MedQA), Math (AIME, MGSM), Academic (GPQA, MMLU Pro), and Coding (LiveCodeBench, SWE-bench).

Vals AI integrates into development workflows through SDK and CLI tools, enabling automated testing, CI/CD pipeline integration, and regression testing. The platform also supports expert-in-the-loop evaluation with review workflows and annotation capabilities, so that automated metrics can be combined with human judgment when assessing an AI application.
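As a rough illustration of what regression testing in a CI/CD pipeline can look like, the sketch below compares the pass rates from a current evaluation run against a stored baseline and flags any suite that dropped by more than a tolerance. Everything here is an assumption made for illustration: the function name `find_regressions`, the suite names, the scores, and the 0.02 tolerance are hypothetical and do not come from the Vals AI SDK or CLI.

```python
from typing import Dict, List

def find_regressions(
    baseline: Dict[str, float],
    current: Dict[str, float],
    tolerance: float = 0.02,
) -> List[str]:
    """Return names of suites whose pass rate fell by more than `tolerance`."""
    regressions = []
    for suite_name, old_rate in baseline.items():
        # A suite missing from the current run is treated as a 0.0 pass rate.
        new_rate = current.get(suite_name, 0.0)
        if old_rate - new_rate > tolerance:
            regressions.append(suite_name)
    return sorted(regressions)

# Hypothetical pass rates; in practice these would come from evaluation runs.
baseline = {"legal-qa": 0.90, "tax-eval": 0.80}
current = {"legal-qa": 0.91, "tax-eval": 0.70}

regressed = find_regressions(baseline, current)
# A CI job would typically exit with this code so a regression fails the build.
exit_code = 1 if regressed else 0
print("regressed suites:", regressed, "exit code:", exit_code)
```

The tolerance exists because LLM outputs vary between runs; gating on any drop at all would make the pipeline fail on noise rather than on real regressions.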

For enterprise teams building AI applications, Vals AI provides the infrastructure needed to ensure model performance, accuracy, and reliability before deployment, with detailed analytics on cost, latency, and quality metrics.

Pricing

Free Plan Available

- Free version available

Public Benchmarks

The Public Benchmarks plan includes access to public benchmark results and model comparison tools.

- Access to public benchmark results

- Model comparison tools

- Industry-specific benchmark insights

Enterprise Platform

An enterprise-grade plan with custom evaluation platform access, private benchmark creation, and dedicated support.

- Custom evaluation platform access

- Private benchmark creation

- SDK and CLI tools

- CI/CD integrations

- Expert review workflows

- Custom pricing based on usage

Capabilities

Key Features

- Test suite creation and management for LLM applications

- Industry-specific benchmarks across Legal, Finance, Healthcare, Math, and Coding

- Private and secure evaluation to prevent dataset leakage

- SDK and CLI tools for automated testing workflows

- CI/CD pipeline integrations for regression testing

- Expert review and annotation workflows

- Real-time performance, cost, and latency analytics

- RAG system evaluation capabilities

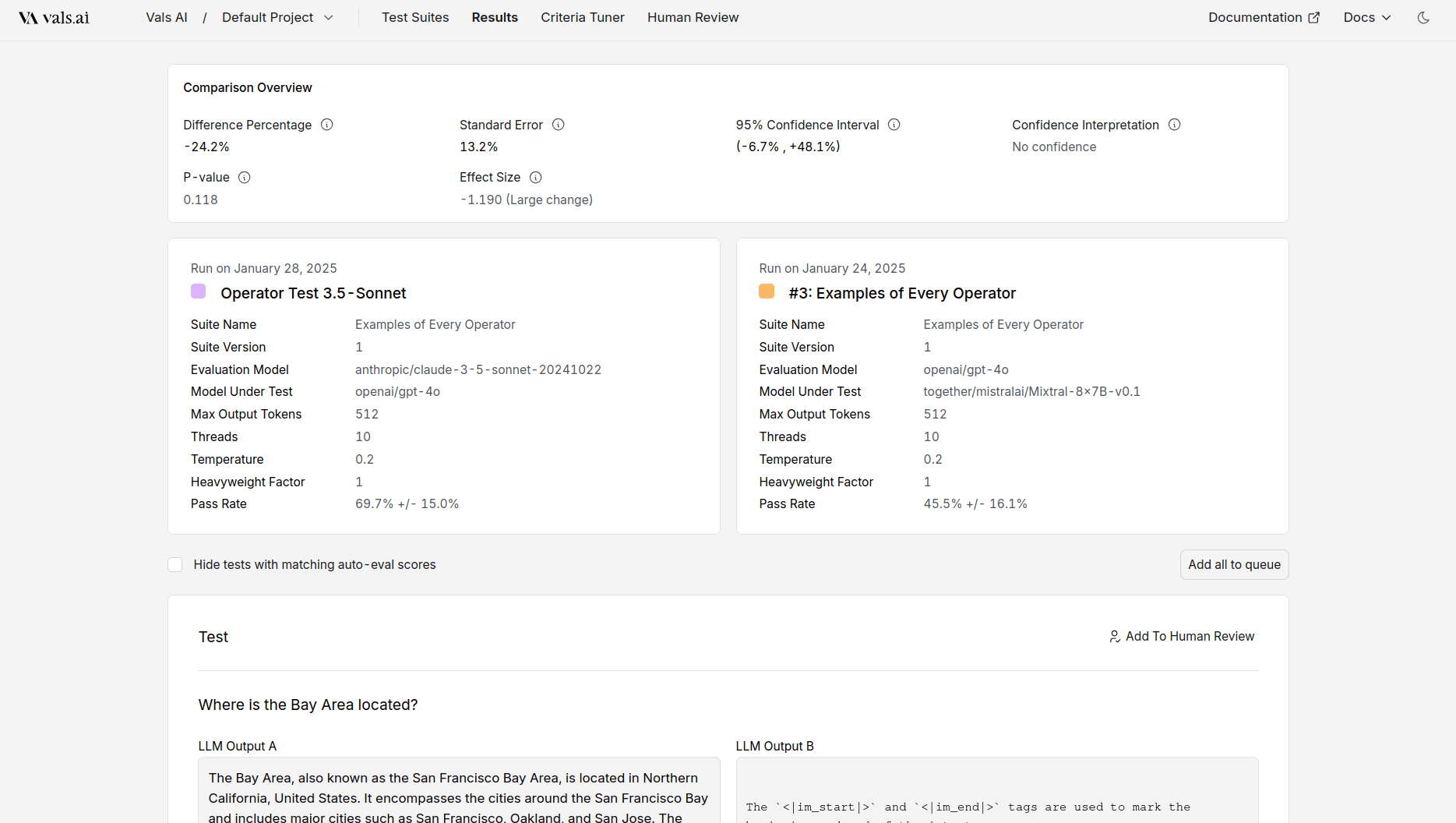

- Model comparison and ranking tools

- Custom benchmark creation for specific domains

- Public benchmark resources for model comparison

- Automated test case generation and validation
