Hugging Face, Inc.

To democratize good machine learning, one commit at a time. Hugging Face is building the world's largest open-source AI community and platform for machine learning models, datasets, and applications.

Founding Story

Hugging Face was founded in 2016 in New York City by three French entrepreneurs who met through an online Stanford engineering class and study group. The company, named after the hugging face emoji, originally set out to build a fun, open-domain conversational AI chatbot for teenagers. The pivotal moment came in late 2018, when Google released the BERT model: Hugging Face produced and open-sourced a PyTorch implementation within a week, and it gained massive community traction. This success led the company to pivot formally in 2019 from the consumer chatbot to an open-source hub and infrastructure platform for machine learning. The company was built on a philosophy of democratizing AI and making advanced ML accessible to everyone.


Leadership

Founders

Clément Delangue

Co-founder and CEO. From La Bassée, Northern France. Previously worked at Moodstocks (acquired by Google) and eBay, and co-founded VideoNot.es and UniShared. Studied at ESCP Business School, with additional studies at Stanford University and the Indian Institute of Management Bangalore. Brings product and marketing expertise.

Julien Chaumond

Co-founder and CTO. Computer engineer and elite mathematician. Previously worked at France's Ministry of Economy and Finance. Co-founded Glose. Studied electrical engineering and computer science at Stanford University and École Polytechnique. Met the other founders through an online Stanford engineering class.

Thomas Wolf

Co-founder and Chief Science Officer (CSO). PhD in Statistical and Quantum Physics from Pierre and Marie Curie University. Former European Patent Attorney at Cabinet Plasseraud and research intern at Lawrence Berkeley National Laboratory. Trained scientist turned patent lawyer who transitioned to AI/ML.

Executive Team

Clément Delangue

Co-Founder and CEO

ESCP Business School, Stanford University, Indian Institute of Management Bangalore. Previously at Moodstocks (Google), eBay. Co-founded VideoNot.es and UniShared.

Julien Chaumond

Co-Founder and CTO

Stanford University, École Polytechnique. Former advisor at French Ministry of Economy and Finance. Co-founder of Glose.

Business Model

Revenue Model

Freemium, usage-based business model with four revenue streams:

- Subscriptions: PRO accounts ($9/month) and per-user seat licenses for the Team ($20/user/month) and Enterprise ($50+/user/month) plans.

- Consumption-based infrastructure (IaaS): on-demand compute for hosting ML applications (Spaces) and deploying production models (Inference Endpoints).

- Storage: billed by volume (per TB) and repository visibility.

- Enterprise services: expert support, legal and compliance features, and personalized support.

Pricing Tiers

Hub (Free): Central platform for exploring, experimenting, collaborating, and building ML technology. Includes model evaluation, a dataset viewer, and Git-based collaboration.

PRO ($9/month): Compared with the free tier, 10x private storage capacity, 20x included inference credits, and 8x ZeroGPU quota with the highest queue priority. Also includes Spaces Dev Mode, ZeroGPU Spaces hosting, the ability to publish blog articles, the dataset viewer for private datasets, and a Pro badge.

Team ($20/user/month): SSO/SAML support, storage region selection, audit logs, resource groups for access control, repository usage analytics, auth policies, centralized token control, and the private dataset viewer. All members receive ZeroGPU and Inference Providers PRO benefits.

Enterprise ($50+/user/month): All Team plan benefits plus the highest storage, bandwidth, and API rate limits, managed billing with annual commitments, legal and compliance processes, and personalized support.

Storage: Managed storage for ML models and datasets. Volume discounts are available at the 50 TB+, 200 TB+, and 500 TB+ tiers.

Spaces hardware: On-demand compute for hosting ML applications, ranging from CPU Basic (free) up to 8x Nvidia A100 ($20/hour) and 8x L40S ($23.50/hour). ZeroGPU is available free of charge, with GPUs allocated dynamically.

Inference Endpoints: Dedicated model deployment service across AWS, Azure, and GCP. CPU instances cost $0.01-$0.54/hour, accelerator instances $0.75-$12/hour, and GPU instances $0.50-$80/hour, supporting NVIDIA T4, L4, L40S, A10G, A100, H100, H200, and B200.
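Because the model combines per-seat subscriptions with consumption-based compute, a rough monthly bill is seats times the seat price plus compute hours times the hourly rate. The sketch below illustrates that arithmetic using the list prices quoted above for the Team plan and Spaces hardware. `estimate_monthly_cost` is a hypothetical helper, not a Hugging Face API, and it deliberately ignores storage, taxes, included credits, and discounts.

```python
# Hypothetical cost-estimate sketch; not a Hugging Face API.
# Assumption: USD list prices as quoted in this profile. Storage, taxes,
# included credits, and volume discounts are ignored.

TEAM_SEAT_PER_MONTH = 20.00      # Team plan, per user per month
SPACES_A100_8X_PER_HOUR = 20.00  # Spaces hardware: 8x Nvidia A100
SPACES_L40S_8X_PER_HOUR = 23.50  # Spaces hardware: 8x L40S


def estimate_monthly_cost(seats: int, gpu_hours: float, hourly_rate: float) -> float:
    """Return seat licences plus consumption-based compute for one month."""
    if seats < 0 or gpu_hours < 0 or hourly_rate < 0:
        raise ValueError("inputs must be non-negative")
    seat_cost = seats * TEAM_SEAT_PER_MONTH    # subscription revenue stream
    compute_cost = gpu_hours * hourly_rate     # usage-based revenue stream
    return seat_cost + compute_cost


if __name__ == "__main__":
    # A 10-person team running an 8x A100 Space for 100 hours in a month.
    total = estimate_monthly_cost(10, 100, SPACES_A100_8X_PER_HOUR)
    print(f"${total:,.2f}")
```

In this example the seat licences contribute $200 and the compute contributes $2,000, which shows how usage-based charges can dominate a subscription-heavy plan once GPU hours grow.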

Target Markets

Customer Segments

- AI researchers and academics

- Machine learning engineers and data scientists

- Enterprise organizations (Fortune 500 companies)

- Startups and indie developers

Industries

- Healthcare and pharmaceuticals

- Finance and banking

Use Cases

- Natural language processing (NLP)

- Computer vision

- Audio and speech processing

- Text generation and chatbots

- Image generation and editing

- Sentiment analysis

Notable Organizations

- Intel

- Pfizer

- Bloomberg

- eBay

History & Milestones

Platform reached 2.4 million models and 50,000 participating organizations

Laid off 4% of staff (approximately 10 people from the sales team)

Acquired Pollen Robotics for an undisclosed amount

Reached 2,000 paying enterprise customers

Platform surpassed 2 million models

4 AI Tools by Hugging Face, Inc.

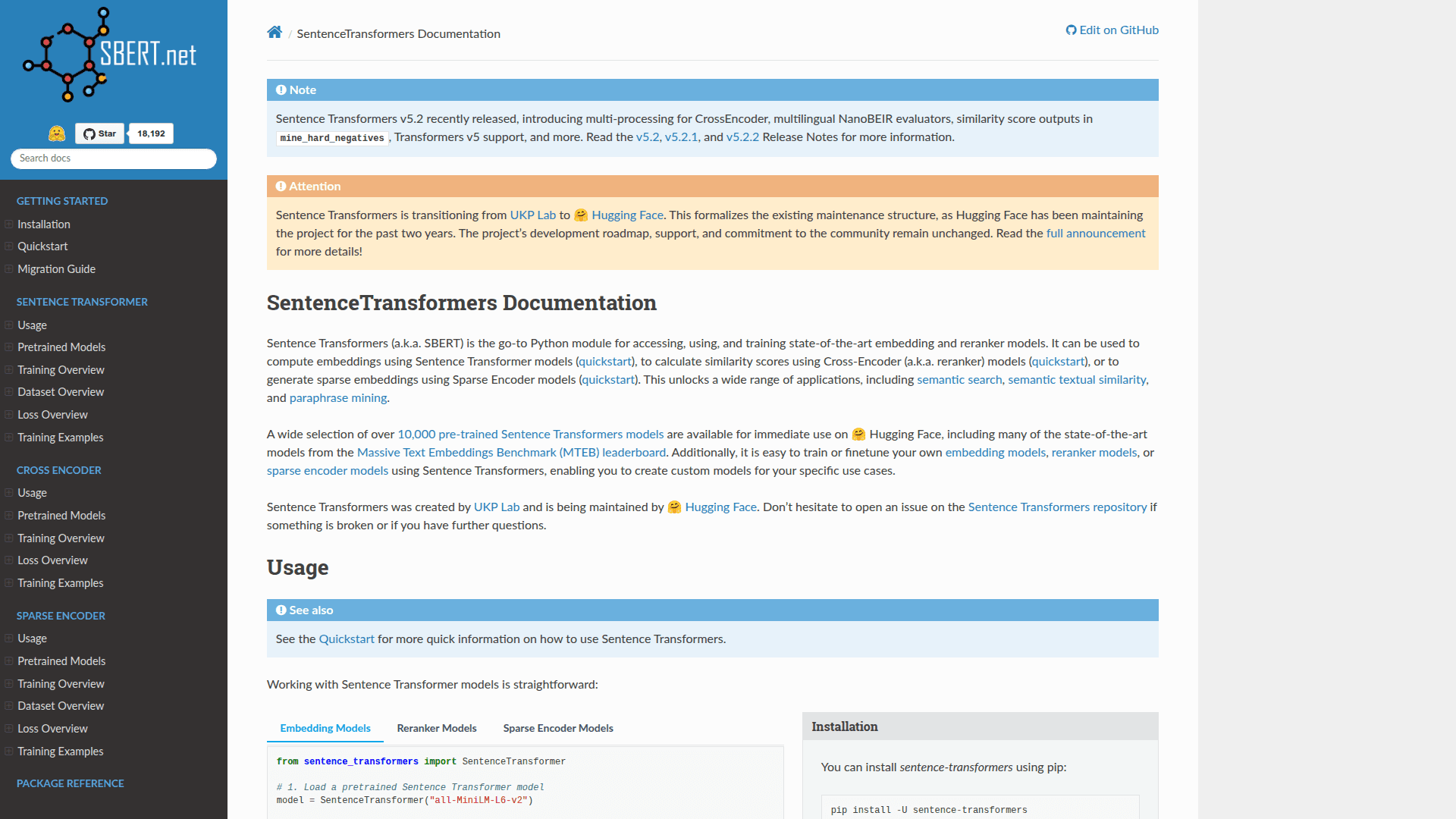

Python library for state-of-the-art sentence, text, and image embeddings using transformer models for semantic search and similarity.
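Semantic search with embeddings usually comes down to ranking stored vectors by their cosine similarity to a query vector. The pure-Python sketch below shows only that ranking step. It does not call the Sentence Transformers library: the three-dimensional "embeddings" are invented for illustration, whereas a real embedding model would produce much longer vectors. `cosine_similarity` and `semantic_search` are hypothetical helper names.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("a zero vector has no direction")
    return dot / (norm_a * norm_b)


def semantic_search(query: list[float],
                    corpus: dict[str, list[float]],
                    top_k: int = 2) -> list[tuple[str, float]]:
    """Return the top_k corpus texts ranked by similarity to the query."""
    scored = [(text, cosine_similarity(query, vec)) for text, vec in corpus.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)  # most similar first
    return scored[:top_k]


# Toy vectors invented for illustration; a real model would embed the text.
corpus = {
    "How do I reset my password?": [0.9, 0.1, 0.0],
    "Best pizza in New York": [0.0, 0.2, 0.9],
    "Forgot my login credentials": [0.8, 0.3, 0.1],
}
query = [1.0, 0.2, 0.0]  # stands in for an embedding of "password recovery"

for text, score in semantic_search(query, corpus):
    print(f"{score:.3f}  {text}")
```

Cosine similarity ignores vector length and compares only direction, so two texts with similar meaning rank close together even if their embeddings differ in magnitude.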

HuggingChat

Web-based chat interface by Hugging Face that lets users interact with and evaluate hosted language models through a browser-based conversational UI.

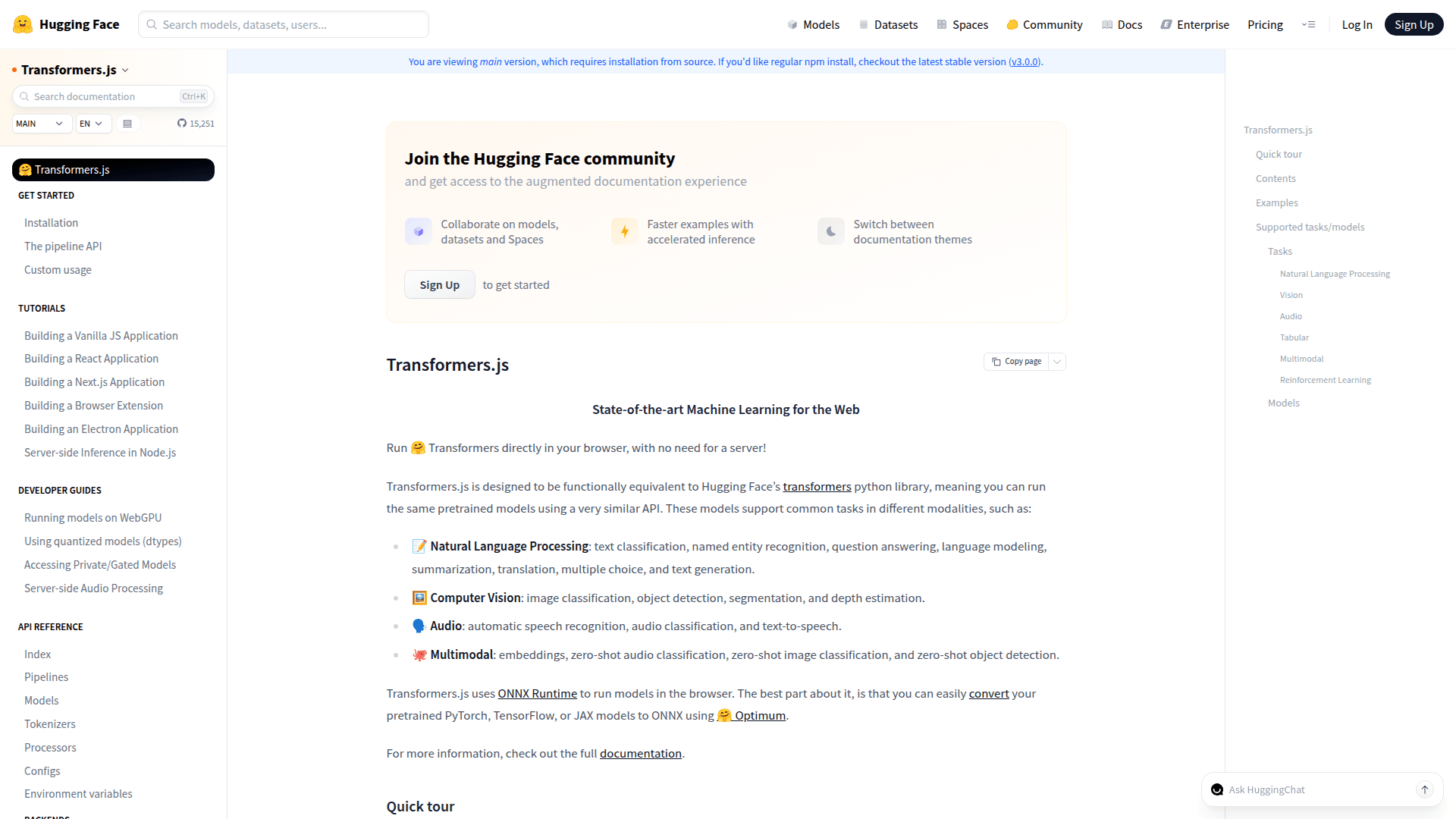

Run Hugging Face Transformers directly in your browser with no server required

Hugging Face

Platform for accessing, sharing, and deploying machine learning models and datasets.