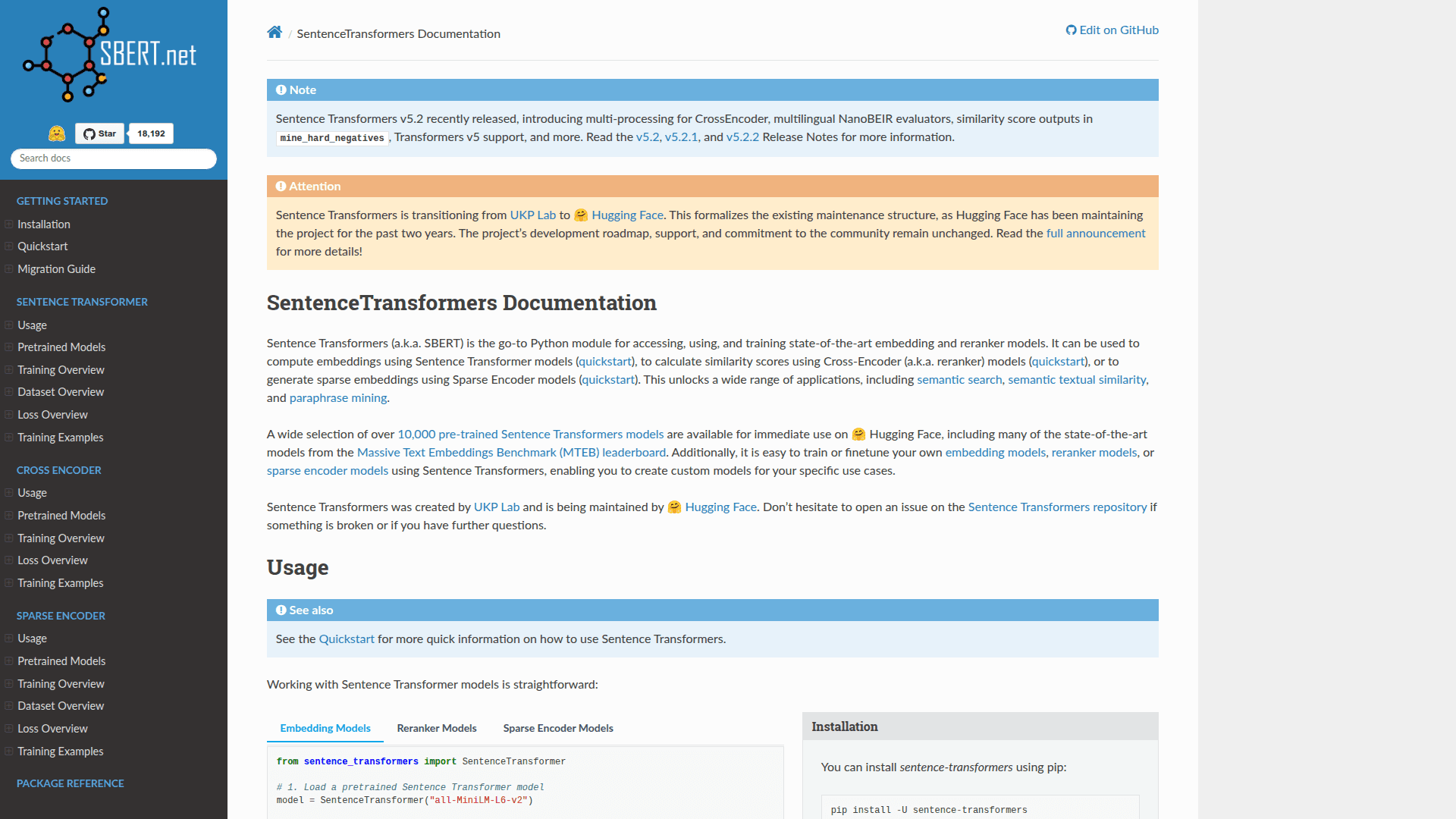

Sentence Transformers

Python library for state-of-the-art sentence, text, and image embeddings using transformer models for semantic search and similarity.

About Sentence Transformers

Sentence Transformers (SBERT) is the go-to Python module for accessing, using, and training state-of-the-art embedding and reranker models. It computes dense embeddings with Sentence Transformer models, calculates similarity scores with Cross-Encoder (reranker) models, and generates sparse embeddings with Sparse Encoder models. These capabilities support applications such as semantic search, semantic textual similarity, and paraphrase mining. Originally created by UKP Lab and now maintained by Hugging Face, the library provides access to over 10,000 pre-trained models on the Hugging Face Hub.

- Sentence Transformer Models: Compute dense embeddings for sentences and texts using bi-encoder architecture, enabling fast similarity calculations through cosine similarity or dot product operations.

- Cross-Encoder Models: Calculate precise similarity scores between text pairs using reranker models, ideal for re-ranking search results with higher accuracy than bi-encoders.

- Sparse Encoder Models: Generate sparse embeddings using SPLADE and similar architectures, providing interpretable representations with vocabulary-sized dimensions.

- Pre-trained Model Hub: Access over 10,000 pre-trained models on Hugging Face, including state-of-the-art models from the MTEB leaderboard for various embedding tasks.

- Custom Model Training: Train or finetune your own embedding, reranker, or sparse encoder models using comprehensive training pipelines with multiple loss functions and evaluators.

- Semantic Search: Build semantic search applications with optimized implementations supporting Elasticsearch, OpenSearch, Qdrant, and approximate nearest neighbor libraries.

- Embedding Quantization: Reduce memory usage with binary and scalar (int8) quantization while maintaining search quality for large-scale deployments.

- Multi-GPU Support: Scale inference and training across multiple GPUs with built-in multi-process encoding and distributed training capabilities.

- ONNX and OpenVINO: Speed up inference by exporting models to ONNX format or using OpenVINO for optimized CPU inference.
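The ideas behind dense-embedding similarity and binary quantization can be illustrated without the library. The sketch below is a toy, library-free illustration and not Sentence Transformers' own implementation: the four-dimensional vectors, the document names, the zero threshold for binarization, and the helper functions are all assumptions made for the example. It ranks a small corpus once by cosine similarity on the float vectors and once by Hamming distance on one-bit-per-dimension codes.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def binary_quantize(vec: Sequence[float]) -> int:
    """Keep only the sign of each dimension, packed as one bit per dimension.

    Assumption: values above zero map to 1, everything else to 0.
    """
    bits = 0
    for value in vec:
        bits = (bits << 1) | (1 if value > 0 else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which two packed codes differ."""
    return bin(a ^ b).count("1")


# Hypothetical toy "embeddings"; real models produce hundreds of dimensions.
query: List[float] = [0.9, -0.2, 0.4, -0.7]
corpus: Dict[str, List[float]] = {
    "doc_a": [0.8, -0.1, 0.5, -0.6],
    "doc_b": [-0.7, 0.3, -0.2, 0.9],
    "doc_c": [0.1, 0.6, 0.7, -0.3],
}

# Rank by cosine similarity on the full-precision vectors (higher is closer).
by_cosine = sorted(
    corpus, key=lambda name: cosine_similarity(query, corpus[name]), reverse=True
)

# Rank by Hamming distance on the 1-bit codes (lower is closer).
query_bits = binary_quantize(query)
by_hamming = sorted(
    corpus, key=lambda name: hamming_distance(query_bits, binary_quantize(corpus[name]))
)

print(by_cosine)
print(by_hamming)
```

In this toy case both rankings agree, which is the intuition behind trading precision for memory: each dimension shrinks from a 32-bit float to a single bit. On real data the binary ranking is only approximate, so a common pattern is to rescore the top candidates with the full-precision vectors.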

To get started, install the library with `pip install -U sentence-transformers`. Load a model with `SentenceTransformer("all-MiniLM-L6-v2")` and compute embeddings by calling `model.encode(sentences)`. Calculate pairwise similarities with `model.similarity(embeddings, embeddings)`. For reranking, use the `CrossEncoder` class to score query-passage pairs.
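The quick-start steps can be put together roughly as follows. This is a minimal sketch rather than official usage: it assumes the package is installed and that model weights can be downloaded on first use. The cross-encoder model name is an assumption (the text above names only the embedding model), and `order_by_score` is a hypothetical helper introduced for this example. The library imports sit inside the functions so the file can be loaded even where the package is missing.

```python
from typing import List, Sequence, Tuple


def embed_and_compare(sentences: List[str], model_name: str = "all-MiniLM-L6-v2"):
    """Encode sentences and return (embeddings, pairwise similarity matrix)."""
    # Lazy import: only needed when the function is actually called.
    from sentence_transformers import SentenceTransformer

    model = SentenceTransformer(model_name)
    embeddings = model.encode(sentences)  # one dense vector per sentence
    similarities = model.similarity(embeddings, embeddings)  # N x N scores
    return embeddings, similarities


def order_by_score(
    passages: Sequence[str], scores: Sequence[float]
) -> List[Tuple[str, float]]:
    """Hypothetical helper: pair passages with scores, best score first."""
    paired = zip(passages, (float(s) for s in scores))
    return sorted(paired, key=lambda pair: pair[1], reverse=True)


def rerank(
    query: str,
    passages: List[str],
    # Assumed model name; any cross-encoder checkpoint could be swapped in.
    model_name: str = "cross-encoder/ms-marco-MiniLM-L6-v2",
) -> List[Tuple[str, float]]:
    """Score each (query, passage) pair with a cross-encoder and sort."""
    from sentence_transformers import CrossEncoder

    model = CrossEncoder(model_name)
    scores = model.predict([(query, passage) for passage in passages])
    return order_by_score(passages, scores)
```

A call such as `embed_and_compare(["The weather is lovely today.", "It's so sunny outside!"])` returns the embeddings and a 2 x 2 similarity matrix, while `rerank("how do plants make food?", passages)` returns the passages ordered by cross-encoder score. A typical pipeline retrieves candidates with the fast bi-encoder first and passes only the top few to the slower, more accurate cross-encoder.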

Pricing

Free Plan Available

Get started with Sentence Transformers at no cost, with full library access and 10,000+ pre-trained models.

- Full library access

- 10,000+ pre-trained models

- Training and fine-tuning

- All encoder types (Sentence, Cross, Sparse)

- Community support

Capabilities

Key Features

- Sentence embedding generation

- Cross-encoder reranking

- Sparse encoder models

- Semantic search

- Semantic textual similarity

- Paraphrase mining

- Clustering

- Image search

- Embedding quantization

- Multi-GPU encoding

- ONNX export

- OpenVINO optimization

- Custom model training

- Knowledge distillation

- Multilingual models

- Matryoshka embeddings

- Hard negative mining

- MTEB evaluation