Edgee

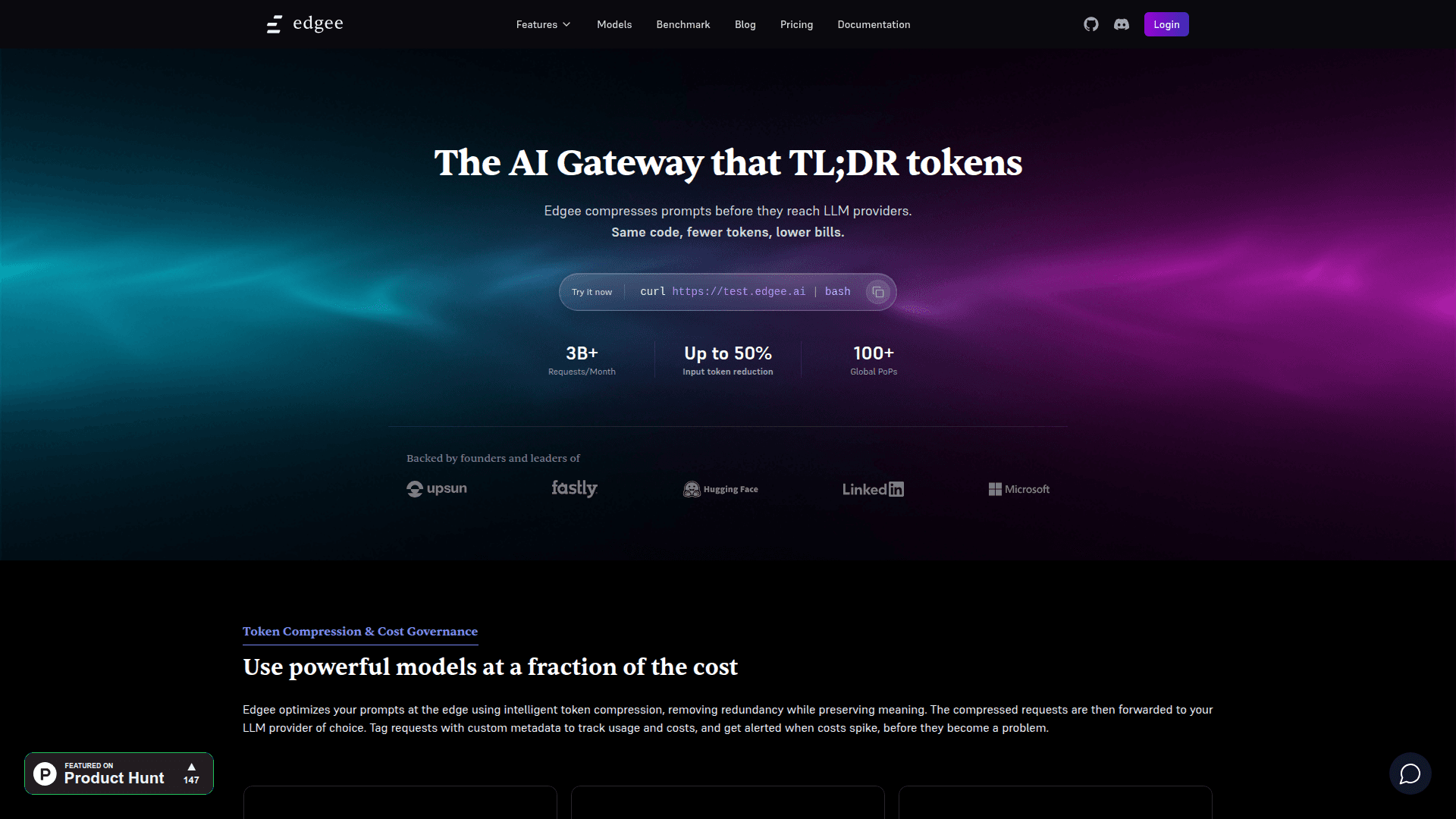

AI Gateway that compresses prompts before they reach LLM providers, reducing token usage by up to 50% while preserving semantic meaning.

About Edgee

Edgee is an AI Gateway that sits between your application and LLM providers, intelligently compressing prompts at the edge to reduce token usage by up to 50% without changing your application logic. It provides a single OpenAI-compatible API that works with over 200 models across providers like OpenAI, Anthropic, Gemini, xAI, and Mistral, enabling cost optimization and operational control for AI-powered applications.
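
Because the gateway is described as OpenAI-compatible, a request to it should have the same shape as an OpenAI chat completion call. The sketch below builds such a request with the Python standard library only and does not send it. The base URL, API key, and model name are placeholders assumed for illustration, not values taken from Edgee's documentation.

```python
import json
import urllib.request

# Hypothetical placeholder: substitute the gateway base URL from your own account.
GATEWAY_BASE_URL = "https://gateway.example.invalid/v1"


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # placeholder model identifier
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{GATEWAY_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send it. An existing OpenAI client pointed at a different base URL should produce an equivalent request.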

Key Features:

- Token Compression - Automatically reduces prompt size by removing redundancy while preserving context and intent, particularly effective for long contexts, RAG payloads, and multi-turn agents

- Multi-Provider Gateway - Routes requests across 200+ models from major providers through a unified OpenAI-compatible API with automatic fallbacks and retries

- Bring Your Own Keys (BYOK) - Use Edgee's keys for convenience or plug in your own provider keys for billing control and access to custom models

- Cost Governance - Tag requests with custom metadata to track usage and costs by feature, team, or project, with alerts when spending spikes

- Observability - Monitor latency, errors, usage, and cost per model, per app, and per environment with activity logs and exports

- Edge Models - Run small, fast models at the edge to classify, redact, enrich, or route requests before they reach an LLM provider

- Edge Tools - Invoke shared tools managed by Edgee or deploy private tools at the edge for lower latency and tighter control

- Private Models - Deploy serverless open-source LLMs on demand and expose them through the same gateway API alongside public providers

- Universal Compatibility - Works with any LLM provider and normalizes responses across models for easy provider switching

- Global Infrastructure - Operates across 100+ global Points of Presence (PoPs) handling 3B+ requests per month
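
To make the "automatic fallbacks and retries" feature concrete, here is a minimal client-side sketch of the general pattern: retry a provider a few times, then move on to the next one. It is not Edgee's implementation. The function name, parameters, and provider callables are all hypothetical.

```python
import time
from typing import Callable, Optional, Sequence


def call_with_fallback(
    providers: Sequence[Callable[[str], str]],
    prompt: str,
    retries_per_provider: int = 2,
    backoff_seconds: float = 0.0,
) -> str:
    """Try each provider in order, retrying before falling back to the next."""
    last_error: Optional[Exception] = None
    for provider in providers:
        for attempt in range(retries_per_provider):
            try:
                return provider(prompt)
            except Exception as exc:  # a real client would catch narrower errors
                last_error = exc
                # Exponential backoff between attempts (no delay when set to 0).
                time.sleep(backoff_seconds * (2 ** attempt))
    raise RuntimeError("all providers failed") from last_error
```

A gateway applies this kind of logic server-side, so the application makes one call and does not need to carry the retry loop itself.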

To get started, sign up for free and receive $5 in credits after onboarding. No credit card is required. Integrate using the OpenAI-compatible API or the native SDKs for TypeScript, Python, Go, and Rust. The platform is SOC 2 and GDPR compliant, making it suitable for enterprise production workloads.

Pricing

Free Plan Available

For teams shipping to production, from first launch to high-scale workloads. Start free with $5 in credits after onboarding.

- OpenAI-compatible API + Chat & API access

- Multi-provider gateway (200+ models)

- Routing, fallbacks & retries

- Observability, logs & exports

- Budgets, cost attribution (tags) + usage tracking
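
Cost attribution by tags amounts to grouping per-request costs under a label and comparing the totals against budgets. The sketch below illustrates that idea with a hypothetical record shape. The field names and budget format are assumptions and do not reflect Edgee's export schema.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class UsageRecord:
    tag: str         # hypothetical label, e.g. a feature, team, or project name
    cost_usd: float  # cost of a single request


def cost_by_tag(records: Iterable[UsageRecord]) -> Dict[str, float]:
    """Sum request costs per tag."""
    totals: Dict[str, float] = defaultdict(float)
    for record in records:
        totals[record.tag] += record.cost_usd
    return dict(totals)


def over_budget(totals: Dict[str, float], budgets: Dict[str, float]) -> List[str]:
    """Return the tags whose spend exceeds their budget (tags without a budget never trigger)."""
    return sorted(
        tag for tag, spent in totals.items()
        if spent > budgets.get(tag, float("inf"))
    )
```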

Edge Models (Token Compression)

Token compression service, currently free; pricing per token saved will apply later.

- Reduce token usage automatically

- Works across providers and models

- Designed for production reliability

- Track savings with built-in reporting
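
As a rough way to reason about what compression is worth, the sketch below estimates net savings on input tokens. The provider price, the reduction ratio, and the per-token-saved fee are all assumed inputs. The listing only states that reduction is "up to 50%" and that the service is currently free, so the fee defaults to zero.

```python
def estimate_net_savings(
    input_tokens: int,
    price_per_million_usd: float,
    reduction: float,
    fee_per_million_saved_usd: float = 0.0,
) -> float:
    """Estimate net USD saved on input tokens for a given compression ratio.

    reduction is the fraction of input tokens removed (0.5 means 50%).
    fee_per_million_saved_usd is a hypothetical future charge per token saved.
    """
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    tokens_saved = input_tokens * reduction
    gross_savings = tokens_saved / 1_000_000 * price_per_million_usd
    fee = tokens_saved / 1_000_000 * fee_per_million_saved_usd
    return gross_savings - fee
```

For example, 2,000,000 input tokens at an assumed $4 per million with a 50% reduction gives $4 saved while the service is free. Actual reduction depends on the prompts and may be well under the stated maximum.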

Edge Tools

Invoke shared tools or deploy private tools at the edge

- Cost per invocation

Private Models

Private Models hosted by Edgee

- Cost per minute hosted

Enterprise

Enterprise features including SSO/SAML and contractual SLA

- SSO / SAML

- Contractual SLA

Capabilities

Key Features

- Token compression with up to 50% input token reduction

- Multi-provider gateway with 200+ models

- OpenAI-compatible API

- Routing, fallbacks and retries

- Bring Your Own Keys (BYOK)

- Cost governance with tags and alerts

- Observability with usage, latency and error metrics

- Activity logs and exports

- Budgets and spend limits

- Edge Models for classification and routing

- Edge Tools deployment

- Private Models hosting

- Prompt caching

- Regional routing and pinning

- Data policy-based routing

- SSO/SAML support