LiteLLM

Open-source LLM gateway and Python SDK that unifies 100+ providers behind an OpenAI-compatible API with cost tracking, budgets, rate limits, and fallbacks.

About LiteLLM

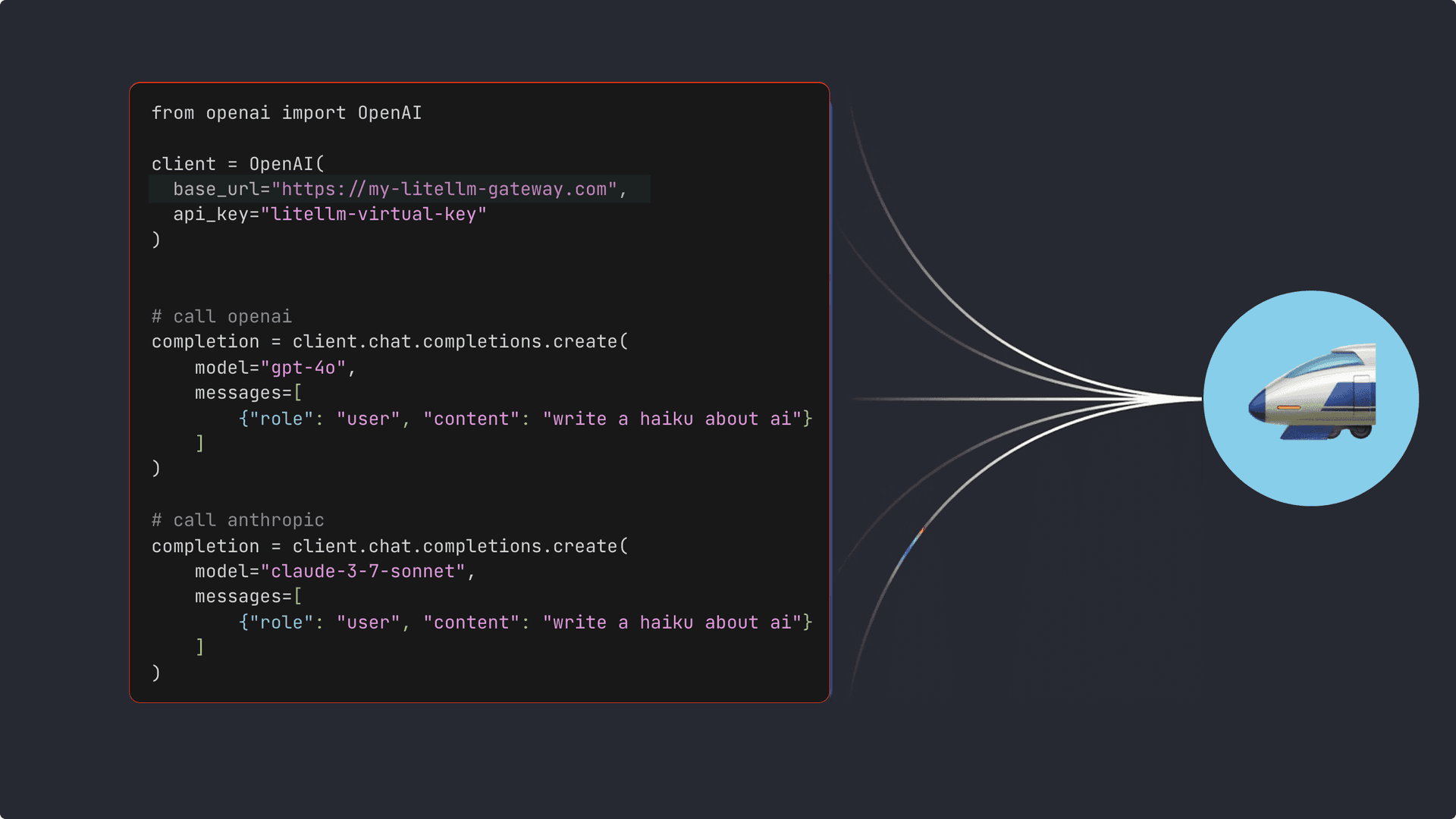

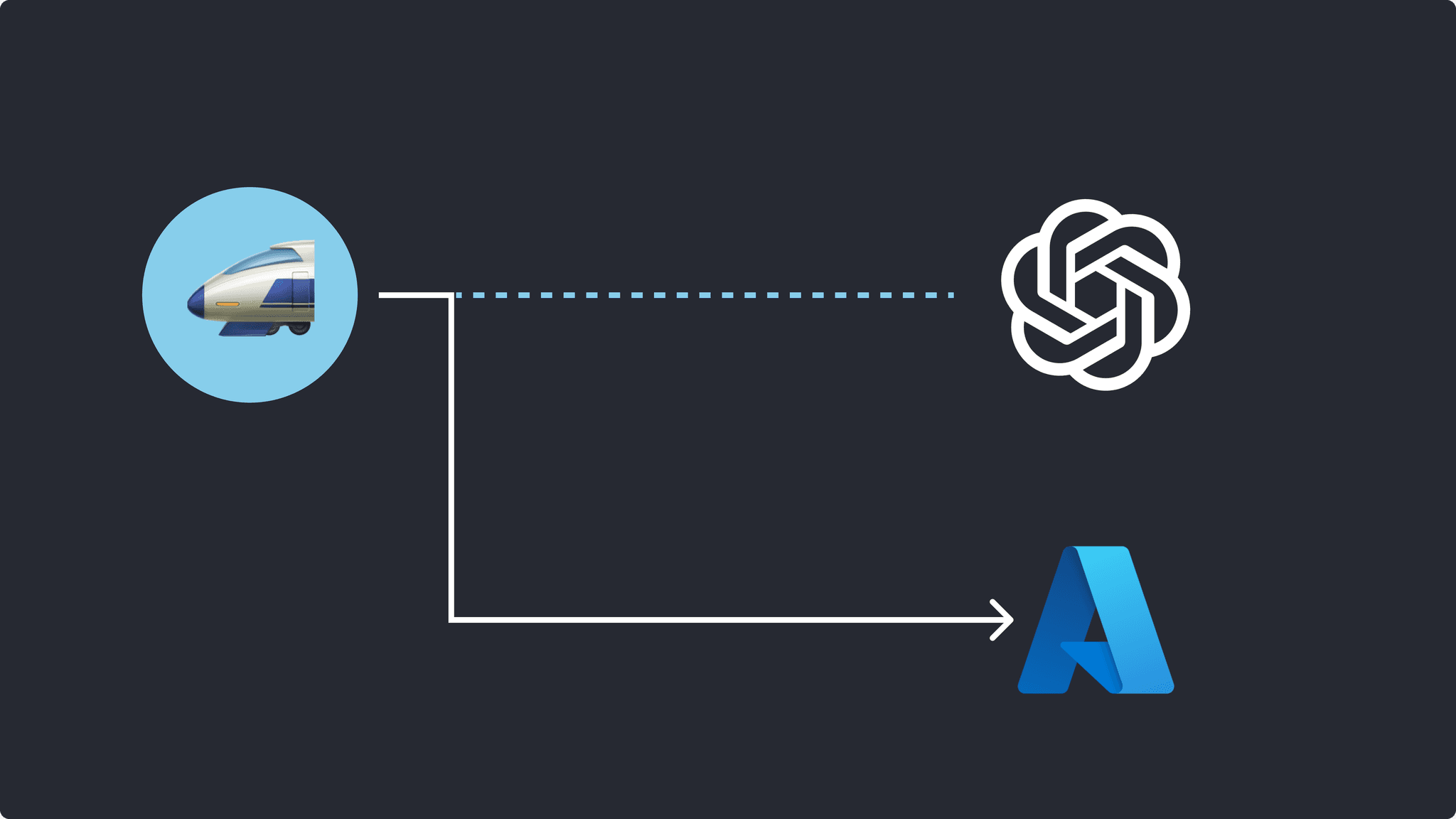

LiteLLM is an open-source LLM gateway (proxy server) and Python SDK that lets teams call 100+ model providers through the OpenAI API format. It adds platform features: load balancing and fallbacks; spend tracking per key, user, and team; budgets and RPM/TPM (requests-per-minute and tokens-per-minute) limits; virtual keys; and an admin UI. It integrates with observability stacks (Langfuse, LangSmith, OpenTelemetry, Prometheus) and supports logging to S3 and GCS. Enterprise options add SSO/JWT authentication, audit logs, and fine-grained guardrails per project.
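To make "the OpenAI API format" concrete, here is a minimal sketch of the request a client might send to a gateway of this kind. It uses only the Python standard library and builds the request without sending it. The base URL, the `/v1/chat/completions` path, the virtual key, and the model name are all assumptions for illustration; check your own deployment for the real values.

```python
import json
import urllib.request

# Assumption: a gateway is listening at this address; adjust to your deployment.
PROXY_BASE_URL = "http://localhost:4000"
# Assumption: placeholder virtual key, not a real credential.
VIRTUAL_KEY = "sk-example-virtual-key"


def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-format chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{PROXY_BASE_URL}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {VIRTUAL_KEY}",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)


if __name__ == "__main__":
    # Hypothetical model name; the gateway decides which provider serves it.
    req = build_chat_request("gpt-4o-mini", "Say hello in one sentence.")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Because the payload shape stays the same regardless of provider, switching models should in principle only require changing the `model` string, with the gateway handling provider-specific translation.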

Pricing

Free Plan Available

The open-source version is free to use and includes 100+ provider integrations and OpenAI-compatible endpoints.

- 100+ provider integrations

- OpenAI-compatible endpoints

- Virtual keys, teams, budgets

- Rate limits, load balancing, guardrails

- Observability integrations (Langfuse, LangSmith, OTEL, Prometheus)

Enterprise (Cloud or Self-Hosted)

Enterprise-grade offering that includes everything in the open-source version, plus enterprise support and custom SLAs.

- Everything in OSS

- Enterprise support & custom SLAs

- JWT/SSO, audit logs, advanced guardrails

- Usage-based pricing; contact sales

Capabilities

Key Features

- OpenAI-compatible API across 100+ LLM providers

- Proxy (LLM Gateway) with routing, load balancing, and fallbacks

- Python SDK for direct calls and streaming

- Cost tracking and spend attribution per key/user/team/org

- Budgets and rate limiting (RPM/TPM)

- Virtual keys, teams, and role/permission controls

- Admin UI for keys, models, teams, and budgets

- Observability: Langfuse, LangSmith, OpenTelemetry, Prometheus

- Audit logs, SSO/JWT auth (Enterprise)

- Guardrails and moderation integrations; per-project guardrails

- Batch API, caching, and prompt formatting for Hugging Face models

- S3/GCS logging of requests and costs
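Two of the features above, fallbacks and per-key budgets, can be illustrated with a toy model. This is a conceptual sketch only, not LiteLLM's implementation or API: `ToyGateway`, `BudgetExceeded`, the flat per-call cost, and the stand-in provider functions are all hypothetical names invented for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


class BudgetExceeded(Exception):
    """Raised when a key has no budget left for another call."""


@dataclass
class ToyGateway:
    # Ordered (name, callable) pairs; earlier entries are tried first.
    deployments: List[Tuple[str, Callable[[str], str]]]
    # Maximum spend per virtual key, in integer cents.
    budget_cents: Dict[str, int]
    # Hypothetical flat price per successful call, in cents.
    cost_per_call_cents: int = 1
    # Running spend per virtual key, in cents.
    spend_cents: Dict[str, int] = field(default_factory=dict)

    def complete(self, key: str, prompt: str) -> Tuple[str, str]:
        """Return (deployment name, text), enforcing the budget for `key`."""
        spent = self.spend_cents.get(key, 0)
        if spent + self.cost_per_call_cents > self.budget_cents.get(key, 0):
            raise BudgetExceeded(key)
        errors = []
        for name, call in self.deployments:
            try:
                text = call(prompt)
            except Exception as exc:  # fall through to the next deployment
                errors.append(f"{name}: {exc}")
                continue
            # Only successful calls are charged to the key.
            self.spend_cents[key] = spent + self.cost_per_call_cents
            return name, text
        raise RuntimeError("all deployments failed: " + "; ".join(errors))


def flaky_provider(prompt: str) -> str:
    """Stand-in for a provider that is currently unavailable."""
    raise TimeoutError("upstream timed out")


def backup_provider(prompt: str) -> str:
    """Stand-in for a healthy provider."""
    return "echo: " + prompt


if __name__ == "__main__":
    gateway = ToyGateway(
        deployments=[("primary", flaky_provider), ("backup", backup_provider)],
        budget_cents={"team-a-key": 2},
    )
    print(gateway.complete("team-a-key", "hello"))
    print(gateway.complete("team-a-key", "again"))
    try:
        gateway.complete("team-a-key", "over budget")
    except BudgetExceeded as exc:
        print("budget exceeded for", exc)
```

Spend is tracked in integer cents to avoid floating-point drift, and the budget check runs before any provider is contacted so that an exhausted key never triggers upstream traffic.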
